25th August - 6th September 2018

In this newsletter from Etymo, you can find out about the latest developments in machine learning research, including the most popular datasets, the most frequently appearing keywords and the important research papers associated with them, and the top trending papers from the past two weeks.

If you and your friends like this newsletter, you can subscribe to our fortnightly newsletters here.

1398 new papers

Etymo added 1398 new papers published in the past two weeks. These papers have 3.7 authors each on average.

The bar diagram below shows the number of papers published each day by some major sources, including arXiv, DeepMind and Facebook. It also shows the publishing pattern of machine learning research papers.

Fortnight Summary

Computer vision (CV) remains a major focus of the research published in the last two weeks, as reflected in the popularity of the CV datasets used. The interest in CV can be subdivided into handwriting recognition, autonomous driving, face detection, general object classification, and the handling of low-resolution or blurred images. There are also some good developments in natural language processing (NLP), with more research on Twitter analysis and intelligent question answering.

In other areas of machine learning, there are interesting ideas about spiking neural networks (Deep Learning in Spiking Neural Networks) and about using a hierarchical CNN for travel speed prediction (Travel Speed Prediction with a Hierarchical Convolutional Neural Network and Long Short-Term Memory Model Framework). There is also a new perspective on data selection (Denoising Neural Machine Translation Training with Trusted Data and Online Data Selection) and a reflection on the more common practices of feature selection (Feature Selection: A Data Perspective).

The trending papers of the last two weeks reflect balanced development across different areas of machine learning: a new framework for object representations for any unsupervised or supervised problem with a co-occurrence structure (Wasserstein is all you need); a study on the effectiveness of self-attention networks for NLP (Why Self-Attention? A Targeted Evaluation of Neural Machine Translation Architectures); and a novel hierarchical framework for semantic image manipulation (Learning Hierarchical Semantic Image Manipulation through Structured Representations).

Popular Datasets

Computer vision is still the main focus area of research. There has been an increase in papers using autonomous driving datasets and this is the first time we have seen the SQuAD dataset near the top.

| Name | Type | Number of Papers |
|---|---|---|
| MNIST | Handwritten Digits | 37 |
| ImageNet | Image Dataset | 33 |
| CIFAR-10 | Tiny Image Dataset | 19 |
| KITTI | Autonomous Driving | 17 |
| COCO | Common Objects in Context | 14 |
| CelebA | Celebrity Faces | 10 |
| Tweets | | 8 |
| SQuAD | Questions and Answers | 8 |

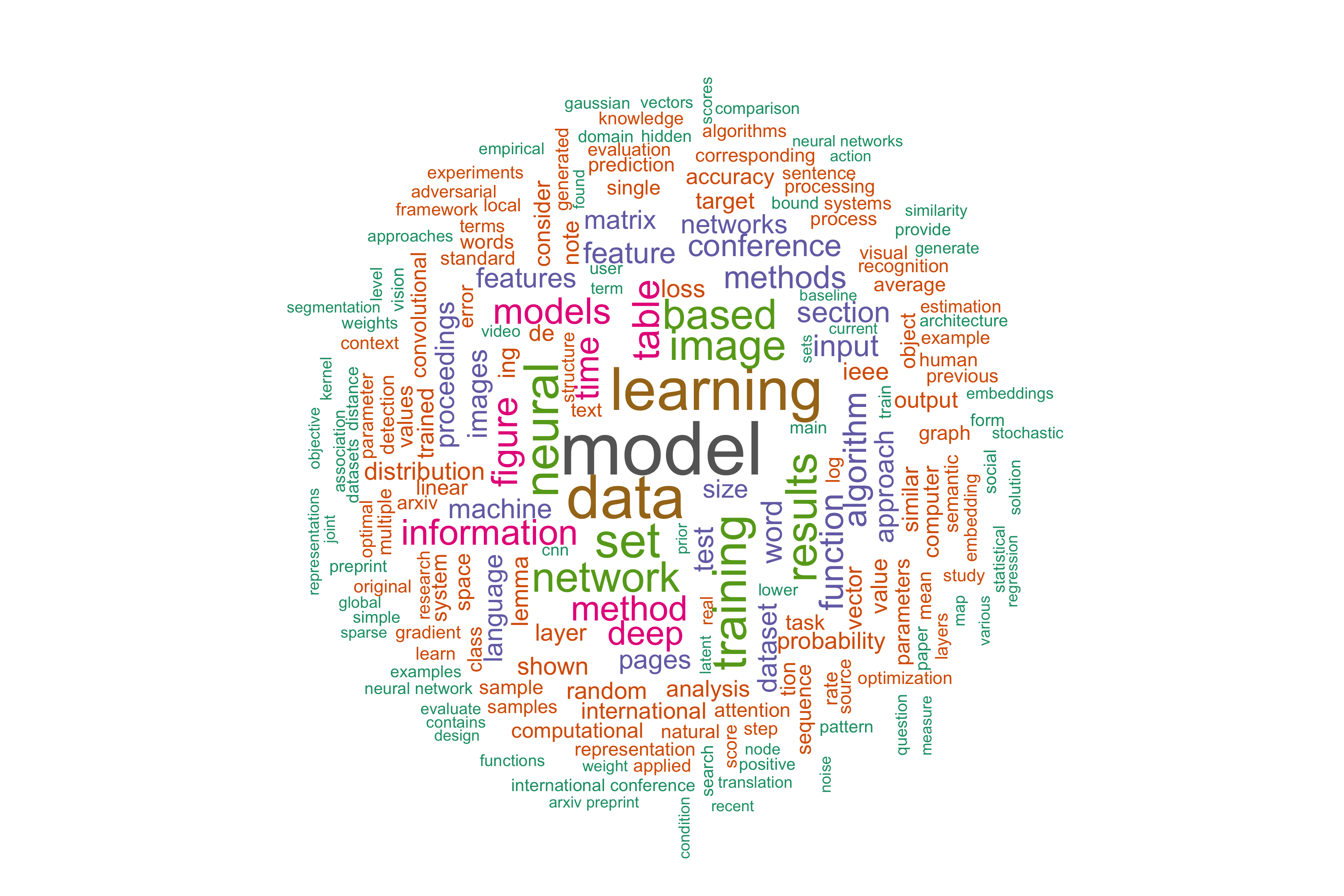

Frequent Words

"Model", "learning", "data" and "neural" are the most frequent words again. Below is a word cloud of all keywords from the last two weeks' papers:

The top two papers associated with each of the keywords are:

- Model:
  - Backdoor Embedding in Convolutional Neural Network Models via Invisible Perturbation
  - Travel Speed Prediction with a Hierarchical Convolutional Neural Network and Long Short-Term Memory Model Framework
- Learning:
  - Discriminative Learning of Similarity and Group Equivariant Representations
  - The Complexity of Learning Acyclic Conditional Preference Networks
- Data:
  - Feature Selection: A Data Perspective
  - Denoising Neural Machine Translation Training with Trusted Data and Online Data Selection
- Neural:
  - Deep Learning in Spiking Neural Networks
  - Quantum enhanced cross-validation for near-optimal neural networks architecture selection

Etymo Trending

Presented below is a list of the most trending papers added in the last two weeks.

- Why Self-Attention? A Targeted Evaluation of Neural Machine Translation Architectures:
  The 10-page paper compares RNNs, CNNs and self-attention networks on subject-verb agreement and word sense disambiguation, using the Lingeval97 and ContraWSD datasets.

- Wasserstein is all you need:
  The 15-page paper proposes a new framework for building unsupervised representations of individual objects based on Wasserstein distances and Wasserstein barycenters. The authors evaluate their method on 9 datasets: BLESS, EVALution, Lenci/Benotto, Weeds, Henderson6, Baroni, Kotlerman, Levy and Turney.

- Learning Hierarchical Semantic Image Manipulation through Structured Representations:
  The 18-page paper from the University of Michigan and Google Brain implements a novel hierarchical framework for semantic image manipulation. The key to the hierarchical framework is to employ a structured semantic layout, or bounding boxes of objects within an image, as an intermediate representation. The authors test their method on the Cityscapes and ADE20K datasets.